A “True Lifecycle Approach” towards governing healthcare AI with the GCC as a global governance model

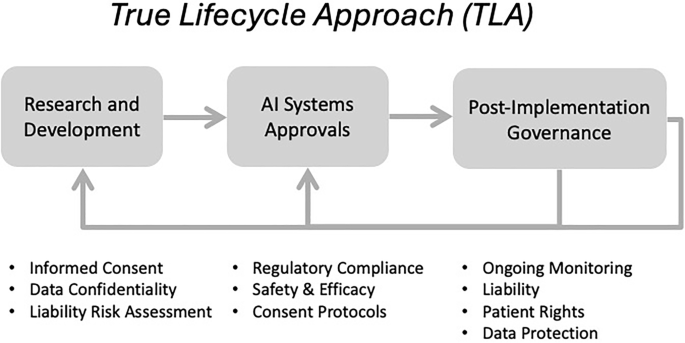

To address the shortcomings of existing governance models, the TLA must begin with a strong foundation in the research and development (R&D) phase. The earliest phase of R&D provides an opportunity to encourage best practices that account for local considerations. Between 2021 and 2024, a multidisciplinary research team at Hamad Bin Khalifa University (HBKU), Qatar, created the “Research Guidelines for Healthcare AI Development,” with the Ministry of Public Health (MOPH) undertaking an official advisory role in the project [3]. These guidelines provide non-binding guidance to researchers developing AI systems in healthcare-related research for subsequent use in the healthcare sector. The project also developed a draft certification process, with future consultations set to establish a more permanent certification process for researchers who comply with the guidelines. The purpose of certification is to give AI systems a mark of credibility, so that stakeholders interfacing with a system post-implementation can be confident that certain standards, including those accounting for patient safety, were followed.

Underlying the guidelines are broader efforts in the GCC to train professionals with a combined understanding of AI technology, ethics, and law. In Qatar, for example, this is evident in the incorporation of AI ethics and legal considerations into medical and healthcare training programs at institutions like Qatar University [24]. Furthering this effort, specialized training programs and workshops, such as the MOPH National Research Ethics Workshop on AI in Healthcare [25], are equipping professionals with the necessary skills to navigate the complex landscape of AI in healthcare. The recent development of local large language models, like QCRI’s Fanar [26], demonstrates a commitment to addressing the societal and ethical implications of AI within the specific regional context. This developing expertise is also important for successfully weaving new governance requirements into the R&D ecosystem.

Thus, several gaps identified in studies can be addressed by the Qatar guidelines [27]. For example, there are gaps in common standards for reporting guidelines in clinical AI research. Some researchers have developed their own AI guidelines for application in specific healthcare systems [28]. Other research has outlined structures similar to the Qatar guidelines to reduce biases in the conception, design, development, validation, and monitoring phases of AI development [29]. The Qatar guidelines are more comprehensive, covering a broad range of best practices beyond bias mitigation and providing a precise and actionable framework. This helps ensure compliance with regulatory demands and aims to safeguard patients from potential harm caused by biased or unreliable AI systems.

The guidelines are organized into three stages: development, external validation, and deployment. These reflect the stages of creating an AI system intended for use in the healthcare sector, from its initial development to its deployment. The development stage requires detailed documentation of model origins, intended use, and ethical considerations. Jurisdictions will differ in the specific matters they want their guidelines to address at the development stage. Indeed, it is crucial that guidelines are adapted to the local context to ensure that the AI system is applicable to its target population.

Qatar has a very diverse population, so specific attention is given to underrepresented groups in whose care AI may be used, including migrant workers and minority populations. One purpose of this is to address the risks of AI bias that could lead to inequitable or discriminatory treatment. For example, AI systems developed for clinical decision support (CDS) only in Arabic or English would fail to serve the vast majority of Qatar’s population, for whom neither Arabic nor English is a first language. Under the principle of ‘fairness’ and the requirements on ‘data and ethics factors’, developers would be required to document how their AI systems accommodate patients who may not speak Arabic or English fluently, ensuring that crucial healthcare information remains accessible.

Further, the guidelines require that AI systems be validated against local and international ethical standards. Local AI standards in Qatar have been established in a recent report by the World Innovation Summit for Health (WISH) providing Islamic perspectives on medical accountability for AI in healthcare [30]. Unlike other frameworks, which often address ethical issues reactively, Qatar’s guidelines seek to ensure that such concerns are mitigated before approval or deployment, with the aim of reducing potential regulatory friction. For patients, this means AI systems deployed in healthcare take into account ethical considerations that encompass cultural and societal expectations.

The aim is also to bridge the often-isolated silos between development and external validation. For instance, researchers should establish their compliance with Qatar’s data protection law (Law No. 13 of 2016) alongside global frameworks such as the General Data Protection Regulation (GDPR) [31]. This emphasis on detailed documentation and compliance with legal and ethical requirements during the R&D phase aims to support smoother transitions into the approval and post-implementation stages of the TLA. Maintaining records at the R&D phase helps preemptively align with regulatory demands for safety, efficacy, and ethical compliance typically assessed during the medical device approval process for relevant systems. By addressing these considerations early, researchers reduce the risk of delays or rejections during approval while ensuring systems are better positioned to meet the requirements of medical device regulators.

Moreover, requirements in the Qatar guidelines for explainability lay the groundwork for addressing the “black-box” problem in post-implementation governance in the third phase of the TLA. For example, the guidelines mandate documenting classification thresholds and provide requirements for explaining AI decision-making processes [32]. These feed into post-market monitoring obligations, such as ensuring system reliability, enabling contestability, and providing avenues for redress. For patients, this should mean that decisions affecting their care will include clear explanations and incorporate accountability mechanisms.
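As a purely illustrative sketch of what documenting a classification threshold could look like in practice, the Python snippet below records a threshold alongside its rationale and attaches that record to each decision. The schema, field names, model name, and numeric values are hypothetical assumptions introduced for illustration; they are not taken from the Qatar guidelines.

```python
from dataclasses import dataclass
import json


@dataclass(frozen=True)
class ThresholdRecord:
    """Documentation entry for one classification threshold (hypothetical schema)."""
    model_name: str
    output_label: str
    threshold: float
    rationale: str  # why this cut-off was chosen


def explain_decision(record: ThresholdRecord, score: float) -> dict:
    """Return a decision together with the documented threshold behind it."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability between 0 and 1")
    flagged = score >= record.threshold
    relation = "at or above" if flagged else "below"
    return {
        "model": record.model_name,
        "label": record.output_label,
        "score": score,
        "threshold": record.threshold,
        "flagged": flagged,
        "explanation": (
            f"Score {score:.2f} is {relation} the documented threshold "
            f"of {record.threshold:.2f} ({record.rationale})."
        ),
    }


# All values below are made up for illustration only.
record = ThresholdRecord(
    model_name="example-risk-model",
    output_label="refer for specialist review",
    threshold=0.30,
    rationale="illustrative value chosen to favour sensitivity",
)
result = explain_decision(record, score=0.42)
print(json.dumps(result, indent=2))
```

Keeping the threshold and its rationale in a single immutable record means the same information can, in principle, serve both the R&D-stage documentation and a later explanation given to a clinician or patient who wishes to contest a decision.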

In this way, Qatar’s approach exemplifies the interconnectedness of the true lifecycle framework. By focusing on ethical rigor, inclusivity, and explainability at the R&D stage, the guidelines prioritize protecting patients and their interests, ensuring that each regulatory phase reinforces and builds upon the others. The rigorous documentation and validation during the R&D phase create a foundation for smoother regulatory approvals, as seen in the SFDA’s framework, which is uniquely tailored to AI’s complexities.
